Back from FOSDEM 2019

Written by dada / 07 February 2019 / No comments


Written by dada / 14 January 2019 / 7 comments

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nextcloud-deployment
spec:
  selector:
    matchLabels:
      app: nextcloud
  replicas: 1
  template:
    metadata:
      labels:
        app: nextcloud
    spec:
      containers:
      - name: nginx
        image: nginx:1.15
        ports:
        - containerPort: 80
        volumeMounts:
        - name: nginx-config
          mountPath: /etc/nginx/nginx.conf
          subPath: nginx.conf
        - name: pv-nextcloud
          mountPath: /var/www/html
        lifecycle:
          postStart:
            exec:
              command: ["/bin/sh", "-c", "mkdir -p /var/www/html"]
      - name: nextcloud
        image: nextcloud:14.0-fpm
        ports:
        - containerPort: 9000
        volumeMounts:
        - name: pv-nextcloud
          mountPath: /var/www/html
        resources:
          limits:
            cpu: "1"
      volumes:
      - name: nginx-config
        configMap:
          name: nginx-config
      - name: pv-nextcloud
        flexVolume:
          driver: ceph.rook.io/rook
          fsType: ceph
          options:
            fsName: myfs
            clusterNamespace: rook-ceph
            path: /nextcloud2

The associated Service is not included, for the simple reason that everyone handles it their own way. If you are hosted at DigitalOcean, OVH, or one of the GAFAM that offer k8s, you will have a suitable LoadBalancer. If you are like me, you are reduced to using NodePort.
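For readers in the NodePort situation, a minimal sketch of such a Service might look like the following. The Service name `nextcloud-service` and the `nodePort` value are assumptions made for illustration; only the `app: nextcloud` label and port 80 of the nginx container come from the Deployment above.

```yaml
# Hypothetical NodePort Service for the Deployment above.
# The name and nodePort are illustrative assumptions, not taken from the original setup.
apiVersion: v1
kind: Service
metadata:
  name: nextcloud-service    # assumed name
spec:
  type: NodePort
  selector:
    app: nextcloud           # matches the pod label set by the Deployment
  ports:
  - name: http
    protocol: TCP
    port: 80                 # Service port inside the cluster
    targetPort: 80           # containerPort of the nginx container
    nodePort: 30080          # assumed; must be within the cluster's NodePort range (30000-32767 by default)
```

If `nodePort` is left out, Kubernetes picks a free port from the range, which `kubectl get service` will then display.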

- name: nginx
  image: nginx:1.15
  ports:
  - containerPort: 80
  volumeMounts:
  - name: nginx-config
    mountPath: /etc/nginx/nginx.conf
    subPath: nginx.conf
  - name: pv-nextcloud
    mountPath: /var/www/html
  lifecycle:
    postStart:
      exec:
        command: ["/bin/sh", "-c", "mkdir -p /var/www/html"]

- name: pv-nextcloud
  mountPath: /var/www/html

- name: nginx-config
  mountPath: /etc/nginx/nginx.conf
  subPath: nginx.conf

kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-config
data:
  nginx.conf: |
    worker_processes 1;

    error_log /var/log/nginx/error.log warn;
    pid /var/run/nginx.pid;

    events {
        worker_connections 1024;
    }

    http {
        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';

        access_log /var/log/nginx/access.log main;

        sendfile on;
        #tcp_nopush on;

        keepalive_timeout 65;

        #gzip on;

        server {
            listen 80;

            add_header X-Content-Type-Options nosniff;
            add_header X-XSS-Protection "1; mode=block";
            add_header X-Robots-Tag none;
            add_header X-Download-Options noopen;
            add_header X-Permitted-Cross-Domain-Policies none;
            add_header Referrer-Policy no-referrer;

            root /var/www/html;

            location = /robots.txt {
                allow all;
                log_not_found off;
                access_log off;
            }

            location = /.well-known/carddav {
                return 301 $scheme://$host/remote.php/dav;
            }

            location = /.well-known/caldav {
                return 301 $scheme://$host/remote.php/dav;
            }

            # set max upload size
            client_max_body_size 10G;
            fastcgi_buffers 64 4K;

            # Enable gzip but do not remove ETag headers
            gzip on;
            gzip_vary on;
            gzip_comp_level 4;
            gzip_min_length 256;
            gzip_proxied expired no-cache no-store private no_last_modified no_etag auth;
            gzip_types application/atom+xml application/javascript application/json application/ld+json application/manifest+json application/rss+xml application/vnd.geo+json application/vnd.ms-fontobject application/x-font-ttf application/x-web-app-manifest+json application/xhtml+xml application/xml font/opentype image/bmp image/svg+xml image/x-icon text/cache-manifest text/css text/plain text/vcard text/vnd.rim.location.xloc text/vtt text/x-component text/x-cross-domain-policy;

            location / {
                rewrite ^ /index.php$request_uri;
            }

            location ~ ^/(?:build|tests|config|lib|3rdparty|templates|data)/ {
                deny all;
            }

            location ~ ^/(?:\.|autotest|occ|issue|indie|db_|console) {
                deny all;
            }

            location ~ ^/(?:index|remote|public|cron|core/ajax/update|status|ocs/v[12]|updater/.+|ocs-provider/.+)\.php(?:$|/) {
                fastcgi_split_path_info ^(.+\.php)(/.*)$;
                include fastcgi_params;
                fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
                fastcgi_param PATH_INFO $fastcgi_path_info;
                # fastcgi_param HTTPS on;
                #Avoid sending the security headers twice
                fastcgi_param modHeadersAvailable true;
                fastcgi_param front_controller_active true;
                fastcgi_pass 127.0.0.1:9000;
                fastcgi_intercept_errors on;
                fastcgi_request_buffering off;
            }

            location ~ ^/(?:updater|ocs-provider)(?:$|/) {
                try_files $uri/ =404;
                index index.php;
            }

            # Adding the cache control header for js and css files
            # Make sure it is BELOW the PHP block
            location ~ \.(?:css|js|woff|svg|gif)$ {
                try_files $uri /index.php$request_uri;
                add_header Cache-Control "public, max-age=15778463";
                add_header X-Content-Type-Options nosniff;
                add_header X-XSS-Protection "1; mode=block";
                add_header X-Robots-Tag none;
                add_header X-Download-Options noopen;
                add_header X-Permitted-Cross-Domain-Policies none;
                add_header Referrer-Policy no-referrer;

                # Optional: Don't log access to assets
                access_log off;
            }

            location ~ \.(?:png|html|ttf|ico|jpg|jpeg)$ {
                try_files $uri /index.php$request_uri;
                # Optional: Don't log access to other assets
                access_log off;
            }
        }
    }

dada@k8smaster1:~$ kubectl apply -f configmap.yaml

dada@k8smaster1:~$ kubectl apply -f nextcloud.yaml

dada@k8smaster1:~$ kubectl get pods

nextcloud-deployment-d6cbb8446-87ckf 2/2 Running 0 15h

Written by dada / 08 November 2018 / 4 comments

apt-get install ceph-fs-common ceph-common

cd /bin

sudo curl -O https://raw.githubusercontent.com/ceph/ceph-docker/master/examples/kubernetes-coreos/rbd

sudo chmod +x /bin/rbd

rbd #Command to download ceph images

dada@k8smaster:~$ helm repo add rook-beta https://charts.rook.io/beta

"rook-beta" has been added to your repositories

dada@k8smaster:~$ helm install --namespace rook-ceph-system rook-beta/rook-ceph

NAME: torrid-dragonfly

LAST DEPLOYED: Sun Nov 4 11:22:24 2018

NAMESPACE: rook-ceph-system

STATUS: DEPLOYED

dada@k8smaster:~$ kubectl --namespace rook-ceph-system get pods -l "app=rook-ceph-operator"

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-f4cd7f8d5-zt7f4 1/1 Running 0 2m25s

dada@k8smaster:~$ kubectl get pods --all-namespaces -o wide | grep rook

rook-ceph-system rook-ceph-agent-pb62s 1/1 Running 0 4m10s 192.168.0.30 k8snode1 <none>

rook-ceph-system rook-ceph-agent-vccpt 1/1 Running 0 4m10s 192.168.0.18 k8snode2 <none>

rook-ceph-system rook-ceph-operator-f4cd7f8d5-zt7f4 1/1 Running 0 4m24s 10.244.2.62 k8snode2 <none>

rook-ceph-system rook-discover-589mf 1/1 Running 0 4m10s 10.244.2.63 k8snode2 <none>

rook-ceph-system rook-discover-qhv9q 1/1 Running 0 4m10s 10.244.1.232 k8snode1 <none>

#################################################################################
# This example first defines some necessary namespace and RBAC security objects.
# The actual Ceph Cluster CRD example can be found at the bottom of this example.
#################################################################################
apiVersion: v1
kind: Namespace
metadata:
  name: rook-ceph
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rook-ceph-cluster
  namespace: rook-ceph
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: rook-ceph-cluster
  namespace: rook-ceph
rules:
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: [ "get", "list", "watch", "create", "update", "delete" ]
---
# Allow the operator to create resources in this cluster's namespace
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: rook-ceph-cluster-mgmt
  namespace: rook-ceph
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: rook-ceph-cluster-mgmt
subjects:
- kind: ServiceAccount
  name: rook-ceph-system
  namespace: rook-ceph-system
---
# Allow the pods in this namespace to work with configmaps
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: rook-ceph-cluster
  namespace: rook-ceph
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rook-ceph-cluster
subjects:
- kind: ServiceAccount
  name: rook-ceph-cluster
  namespace: rook-ceph
---
#################################################################################
# The Ceph Cluster CRD example
#################################################################################
apiVersion: ceph.rook.io/v1beta1
kind: Cluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    # For the latest ceph images, see https://hub.docker.com/r/ceph/ceph/tags
    image: ceph/ceph:v13.2.2-20181023
  dataDirHostPath: /var/lib/rook
  dashboard:
    enabled: true
  storage:
    useAllNodes: true
    useAllDevices: false
    config:
      databaseSizeMB: "1024"
      journalSizeMB: "1024"

kubectl create -f cluster.yaml

dada@k8smaster:~/rook$ kubectl get pods -n rook-ceph -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

rook-ceph-mgr-a-5f6dd98574-tm9md 1/1 Running 0 3m3s 10.244.2.126 k8snode2 <none>

rook-ceph-mon0-sk798 1/1 Running 0 4m36s 10.244.1.42 k8snode1 <none>

rook-ceph-mon1-bxgjt 1/1 Running 0 4m16s 10.244.2.125 k8snode2 <none>

rook-ceph-mon2-snznb 1/1 Running 0 3m48s 10.244.1.43 k8snode1 <none>

rook-ceph-osd-id-0-54c856d49d-77hfr 1/1 Running 0 2m27s 10.244.1.45 k8snode1 <none>

rook-ceph-osd-id-1-7d98bf85b5-rt4jw 1/1 Running 0 2m26s 10.244.2.128 k8snode2 <none>

rook-ceph-osd-prepare-k8snode1-dzd5v 0/1 Completed 0 2m41s 10.244.1.44 k8snode1 <none>

rook-ceph-osd-prepare-k8snode2-2jgvg 0/1 Completed 0 2m41s 10.244.2.127 k8snode2 <none>

apiVersion: ceph.rook.io/v1beta1
kind: Pool
metadata:
  name: replicapool
  namespace: rook-ceph
spec:
  replicated:
    size: 3
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block
provisioner: ceph.rook.io/block
parameters:
  pool: replicapool
  clusterNamespace: rook-ceph

kubectl create -f storageclass.yaml

dada@k8smaster:~/rook$ cat dashboard-external.yaml

apiVersion: v1
kind: Service
metadata:
  name: rook-ceph-mgr-dashboard-external
  namespace: rook-ceph
  labels:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
spec:
  ports:
  - name: dashboard
    port: 7000
    protocol: TCP
    targetPort: 7000
  selector:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
  sessionAffinity: None
  type: NodePort

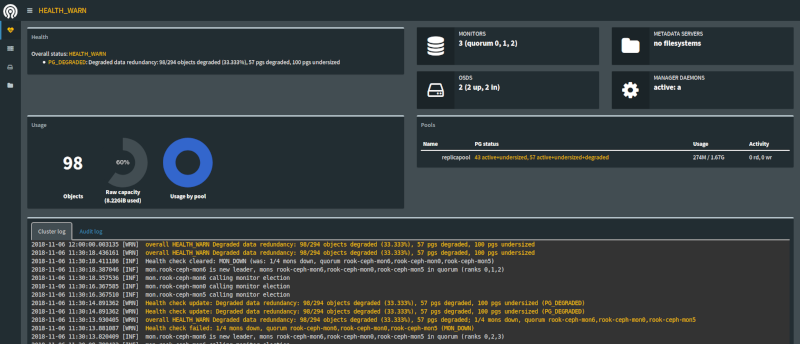

dada@k8smaster:~/rook$ kubectl -n rook-ceph get service | grep Node

rook-ceph-mgr-dashboard-external NodePort 10.99.88.135 <none> 7000:31165/TCP 3m41s

The way of managing the filesystem that I describe here is not without risk. The volumes you create need to be configured carefully. The examples you will find here and there will give you storage in your k8s cluster, sure, but they will probably make your volumes dependent on your pods. If you decide to delete the pod for which you have a PVC, the PV will disappear, and your data with it.
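One way to reduce that risk, sketched here as an assumption and not as part of the setup described in this post, is to set `reclaimPolicy: Retain` on the StorageClass, so that a dynamically provisioned PV is kept when its PVC is deleted. The class name `rook-ceph-block-retain` is hypothetical; the provisioner and parameters follow the `rook-ceph-block` StorageClass from this post.

```yaml
# Hypothetical variant of the rook-ceph-block StorageClass.
# Only the name and the reclaimPolicy line differ; both are illustrative assumptions.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block-retain   # assumed name
provisioner: ceph.rook.io/block
reclaimPolicy: Retain            # keep the PV and its data after the PVC is deleted
parameters:
  pool: replicapool
  clusterNamespace: rook-ceph
```

With `Retain`, a released PV is not reused automatically: it has to be cleaned up or rebound by hand, which is the price of not losing the data silently.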

Take the time to think it through, and to study the question far more thoroughly than what I cover in my posts, before launching into a production installation.