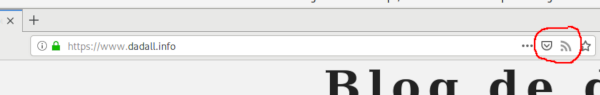

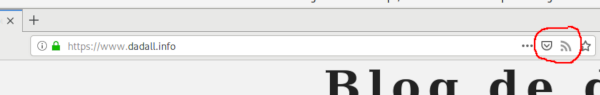

Retrieving your RSS feeds in Firefox

Written by dada / 2 January 2019 / 14 comments

Installation

Usage

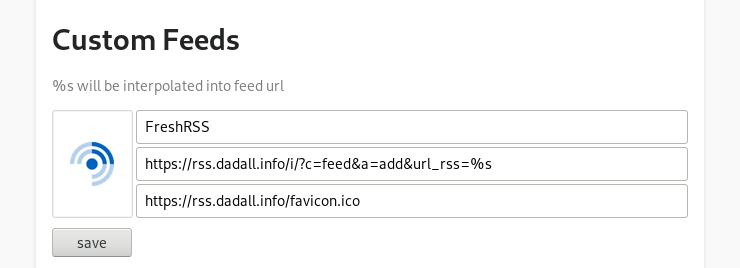

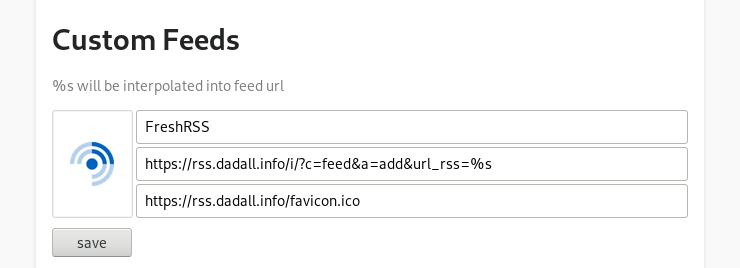

Configuration


Written by dada / 20 December 2018 / 12 comments

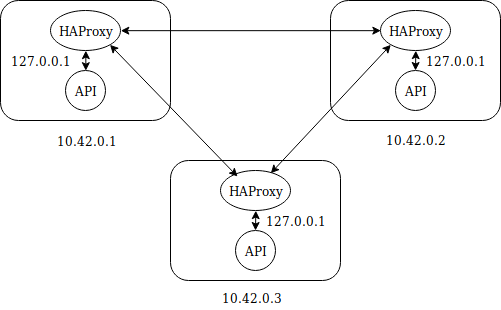

    root@k8smaster1:~# cat kubeadm-config.yaml
    apiVersion: kubeadm.k8s.io/v1beta1
    kind: ClusterConfiguration
    kubernetesVersion: stable
    apiServer:
      certSANs:
      - "127.0.0.1"
    controlPlaneEndpoint: "127.0.0.1:5443"
    networking:
      podSubnet: "10.244.0.0/16"

    global
        log /dev/log local0
        log /dev/log local1 notice
        chroot /var/lib/haproxy
        stats socket /run/haproxy/admin.sock mode 660 level admin
        stats timeout 30s
        user haproxy
        group haproxy
        daemon

        ca-base /etc/ssl/certs
        crt-base /etc/ssl/private

        ssl-default-bind-ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:RSA+AESGCM:RSA+AES:!aNULL:!MD5:!DSS
        ssl-default-bind-options no-sslv3

    defaults
        log global
        mode tcp
        option tcplog
        option dontlognull
        timeout connect 5000
        timeout client 50000
        timeout server 50000
        errorfile 400 /etc/haproxy/errors/400.http
        errorfile 403 /etc/haproxy/errors/403.http
        errorfile 408 /etc/haproxy/errors/408.http
        errorfile 500 /etc/haproxy/errors/500.http
        errorfile 502 /etc/haproxy/errors/502.http
        errorfile 503 /etc/haproxy/errors/503.http
        errorfile 504 /etc/haproxy/errors/504.http

    frontend api-front
        bind 127.0.0.1:5443
        mode tcp
        option tcplog
        use_backend api-backend

    backend api-backend
        mode tcp
        option tcplog
        option tcp-check
        balance roundrobin
        server master1 10.0.42.1:6443 check
        server master2 10.0.42.2:6443 check
        server master3 10.0.42.3:6443 check

root@k8smaster1:~# nc -v localhost 5443

localhost [127.0.0.1] 5443 (?) open

hatop -s /var/run/haproxy/admin.sock

kubeadm init --config=kubeadm-config.yaml

kubeadm join 127.0.0.1:5443 --token a1o01x.tokenblabla --discovery-token-ca-cert-hash sha256:blablablablalblawhateverlablablameans --experimental-control-plane

kubectl apply -f https://github.com/coreos/flannel/raw/master/Documentation/kube-flannel.yml

    dada@k8smaster1:~$ k get nodes
    NAME         STATUS   ROLES    AGE   VERSION
    k8smaster1   Ready    master   12h   v1.13.1
    k8smaster2   Ready    master   11h   v1.13.1
    k8smaster3   Ready    master   11h   v1.13.1

    dada@k8smaster1:~$ k get pods --all-namespaces
    NAMESPACE     NAME                                 READY   STATUS    RESTARTS   AGE
    kube-system   coredns-86c58d9df4-cx4b7             1/1     Running   0          12h
    kube-system   coredns-86c58d9df4-xf8kb             1/1     Running   0          12h
    kube-system   etcd-k8smaster1                      1/1     Running   0          12h
    kube-system   etcd-k8smaster2                      1/1     Running   0          11h
    kube-system   etcd-k8smaster3                      1/1     Running   0          11h
    kube-system   kube-apiserver-k8smaster1            1/1     Running   0          12h
    kube-system   kube-apiserver-k8smaster2            1/1     Running   0          11h
    kube-system   kube-apiserver-k8smaster3            1/1     Running   0          11h
    kube-system   kube-controller-manager-k8smaster1   1/1     Running   1          12h
    kube-system   kube-controller-manager-k8smaster2   1/1     Running   0          11h
    kube-system   kube-controller-manager-k8smaster3   1/1     Running   0          11h
    kube-system   kube-flannel-ds-amd64-55p4t          1/1     Running   1          11h
    kube-system   kube-flannel-ds-amd64-g7btx          1/1     Running   0          12h
    kube-system   kube-flannel-ds-amd64-knjk4          1/1     Running   2          11h
    kube-system   kube-proxy-899l8                     1/1     Running   0          12h
    kube-system   kube-proxy-djj9x                     1/1     Running   0          11h
    kube-system   kube-proxy-tm289                     1/1     Running   0          11h
    kube-system   kube-scheduler-k8smaster1            1/1     Running   1          12h
    kube-system   kube-scheduler-k8smaster2            1/1     Running   0          11h
    kube-system   kube-scheduler-k8smaster3            1/1     Running   0          11h

Written by dada / 1 December 2018 / No comments

    dada@master:~/proxysql$ cat proxysql.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: proxysql
      labels:
        app: proxysql
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: proxysql
          tier: frontend
      strategy:
        type: RollingUpdate
      template:
        metadata:
          labels:
            app: proxysql
            tier: frontend
        spec:
          restartPolicy: Always
          containers:
          - image: severalnines/proxysql:1.4.12
            name: proxysql
            volumeMounts:
            - name: proxysql-config
              mountPath: /etc/proxysql.cnf
              subPath: proxysql.cnf
            ports:
            - containerPort: 6033
              name: proxysql-mysql
            - containerPort: 6032
              name: proxysql-admin
          volumes:
          - name: proxysql-config
            configMap:
              name: proxysql-configmap
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: proxysql
      labels:
        app: proxysql
        tier: frontend
    spec:
      type: NodePort
      ports:
      - nodePort: 30033
        port: 6033
        name: proxysql-mysql
      - nodePort: 30032
        port: 6032
        name: proxysql-admin
      selector:
        app: proxysql
        tier: frontend

dada@master:~/proxysql$ kubectl apply -f proxysql.yaml

    dada@master:~/proxysql$ kubectl get pods --all-namespaces | grep proxysql
    default   proxysql-5c47fb85fb-fdh4g   1/1   Running   1   39h
    default   proxysql-5c47fb85fb-kvdfv   1/1   Running   1   39h

    datadir="/var/lib/proxysql"

    admin_variables=
    {
        admin_credentials="proxysql-admin:adminpwd"
        mysql_ifaces="0.0.0.0:6032"
        refresh_interval=2000
    }

    mysql_variables=
    {
        threads=4
        max_connections=2048
        default_query_delay=0
        default_query_timeout=36000000
        have_compress=true
        poll_timeout=2000
        interfaces="0.0.0.0:6033;/tmp/proxysql.sock"
        default_schema="information_schema"
        stacksize=1048576
        server_version="5.1.30"
        connect_timeout_server=10000
        monitor_history=60000
        monitor_connect_interval=200000
        monitor_ping_interval=200000
        ping_interval_server_msec=10000
        ping_timeout_server=200
        commands_stats=true
        sessions_sort=true
        monitor_username="proxysql"
        monitor_password="proxysqlpwd"
    }

    mysql_replication_hostgroups =
    (
        { writer_hostgroup=10, reader_hostgroup=20, comment="MariaDB Replication" }
    )

    mysql_servers =
    (
        { address="192.168.0.17", port=3306, hostgroup=10, max_connections=100, max_replication_lag = 5 },
        { address="192.168.0.77", port=3306, hostgroup=20, max_connections=100, max_replication_lag = 5}
    )

    mysql_users =
    (
        { username = "nextcloud" , password = "nextcloudpwd" , default_hostgroup = 10 , active = 1 }
    )

    mysql_query_rules =
    (
        {
            rule_id=100
            active=1
            match_pattern="^SELECT .* FOR UPDATE"
            destination_hostgroup=10
            apply=1
        },
        {
            rule_id=200
            active=1
            match_pattern="^SELECT .*"
            destination_hostgroup=20
            apply=1
        },
        {
            rule_id=300
            active=1
            match_pattern=".*"
            destination_hostgroup=10
            apply=1
        }
    )


dada@master:~/proxysql$ kubectl create configmap proxysql-configmap --from-file=proxysql.cnf

If we take the time to go back to the YAML to understand the ConfigMap, we can spot it here:

    containers:
    [...]
      volumeMounts:
      - name: proxysql-config
        mountPath: /etc/proxysql.cnf
        subPath: proxysql.cnf
    [...]
    volumes:
    - name: proxysql-config
      configMap:
        name: proxysql-configmap

This tells us that the ProxySQL pods look up the ConfigMap named "proxysql-configmap", referenced by the volume called "proxysql-config", and mount its proxysql.cnf key at /etc/proxysql.cnf.
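To make the link between the file and the ConfigMap more concrete, here is a minimal sketch of the kind of object that `kubectl create configmap proxysql-configmap --from-file=proxysql.cnf` stores: a single key named after the file, holding its content. The helper name `render_configmap` and the one-line sample file are hypothetical and only there for illustration; on a real cluster kubectl builds the object for you.

```shell
# Hypothetical helper: print a ConfigMap manifest whose single key is the
# file name and whose value is the file content.
render_configmap() {
  name="$1"   # ConfigMap name, e.g. proxysql-configmap
  file="$2"   # path of the file to embed
  key=$(basename "$file")
  printf 'apiVersion: v1\nkind: ConfigMap\nmetadata:\n  name: %s\ndata:\n  %s: |\n' "$name" "$key"
  # Indent every line so it sits inside the YAML block scalar.
  sed 's/^/    /' "$file"
}

# Illustration with a throwaway file (sample content, not the real config).
tmpdir=$(mktemp -d)
printf 'datadir="/var/lib/proxysql"\n' > "$tmpdir/proxysql.cnf"
manifest=$(render_configmap proxysql-configmap "$tmpdir/proxysql.cnf")
printf '%s\n' "$manifest"
rm -rf "$tmpdir"
```

The `subPath: proxysql.cnf` entry in the Deployment then picks that one key out of the volume, so the container sees a single file at /etc/proxysql.cnf rather than a directory.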

A command you should know by heart will prove that everything works:

    dada@master:~/proxysql$ kubectl logs proxysql-5c47fb85fb-fdh4g

On my cluster, it prints something like this:

    2018-12-01 08:30:19 [INFO] Dumping mysql_servers
    +--------------+--------------+------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+-----------------+
    | hostgroup_id | hostname     | port | weight | status | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment | mem_pointer     |
    +--------------+--------------+------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+-----------------+
    | 10           | 192.168.0.17 | 3306 | 1      | 0      | 0           | 100             | 5                   | 0       | 0              |         | 140637072236416 |
    | 20           | 192.168.0.17 | 3306 | 1      | 0      | 0           | 100             | 5                   | 0       | 0              |         | 140637022769408 |
    | 20           | 192.168.0.77 | 3306 | 1      | 0      | 0           | 100             | 5                   | 0       | 0              |         | 140637085320960 |
    +--------------+--------------+------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+-----------------+

It lists the servers and their roles: my master belongs to both the reader and the writer groups, which makes sense since it has to both write and read. My slave only belongs to the reader group, as intended.
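The hostgroups only say where a query may go; the `mysql_query_rules` section of proxysql.cnf decides where it actually goes. As a rough sketch, assuming the rules are evaluated in ascending `rule_id` order, that the first match with `apply=1` wins, and that matching ignores case (my understanding of ProxySQL's defaults, worth checking against its documentation), the three rules behave like the function below. The name `route_query` and the sample queries are made up for illustration.

```shell
# Hypothetical model of the three mysql_query_rules from proxysql.cnf.
# Prints the destination hostgroup: 10 = writer, 20 = reader.
route_query() {
  query="$1"
  # rule_id=100: locking reads must go to the writer.
  if printf '%s\n' "$query" | grep -Eiq '^SELECT .* FOR UPDATE'; then
    echo 10
  # rule_id=200: every other SELECT goes to the readers.
  elif printf '%s\n' "$query" | grep -Eiq '^SELECT .*'; then
    echo 20
  # rule_id=300: everything else (INSERT, UPDATE, ...) goes to the writer.
  else
    echo 10
  fi
}

route_query "SELECT * FROM oc_users"                    # prints 20
route_query "SELECT id FROM oc_jobs FOR UPDATE"         # prints 10
route_query "UPDATE oc_users SET displayname = 'dada'"  # prints 10
```

The order matters: if the generic `^SELECT .*` rule came first, `SELECT ... FOR UPDATE` would be sent to a reader.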

    MariaDB [(none)]> SHOW VARIABLES like 'read_only';
    +---------------+-------+
    | Variable_name | Value |
    +---------------+-------+
    | read_only     | ON    |
    +---------------+-------+
    1 row in set (0.00 sec)

Written by dada / 23 November 2018 / 2 comments

Here, much as with APT, we add a repository to Helm so that it can install what we ask for.

    helm repo add coreos https://s3-eu-west-1.amazonaws.com/coreos-charts/stable/

Next comes the installation of the operator, the big boss that will take care of Prometheus in k8s for us.

    helm install coreos/prometheus-operator --name prometheus-operator --namespace monitoring

    dada@master:~/prometheus$ kubectl get pods -n monitoring -o wide
    NAME                                                   READY   STATUS    RESTARTS   AGE   IP             NODE     NOMINATED NODE
    alertmanager-kube-prometheus-0                         2/2     Running   0          37m   10.244.3.140   node3    <none>
    kube-prometheus-exporter-kube-state-65b6dbf6b4-b52jl   2/2     Running   0          33m   10.244.3.141   node3    <none>
    kube-prometheus-exporter-node-5tnsl                    1/1     Running   0          37m   192.168.0.76   node1    <none>
    kube-prometheus-exporter-node-fd7pt                    1/1     Running   0          37m   192.168.0.49   node3    <none>
    kube-prometheus-exporter-node-mfdj2                    1/1     Running   0          37m   192.168.0.22   node2    <none>
    kube-prometheus-exporter-node-rg5q6                    1/1     Running   0          37m   192.168.0.23   master   <none>
    kube-prometheus-grafana-6f6c894c5b-2d6h4               2/2     Running   0          37m   10.244.2.165   node2    <none>
    prometheus-kube-prometheus-0                           3/3     Running   1          37m   10.244.1.187   node1    <none>
    prometheus-operator-87779759-wkpfz                     1/1     Running   0          49m   10.244.1.185   node1    <none>

Note that if, like me, you are on a standard ADSL connection, you will have time to brew a large coffee and go drink it in the sun with a cigarette in your mouth. Your cluster is going to download a lot of pods, and on every node.
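While the images download, some pods will sit in a state other than Running. A minimal sketch for spotting them, assuming the default `kubectl get pods` column layout seen in the listing above (NAME, READY, STATUS, ...): the helper name `not_running` and the sample lines, including the ContainerCreating state, are invented for illustration.

```shell
# Hypothetical filter: read `kubectl get pods` output on stdin and print the
# name and status of every pod whose STATUS column is not "Running".
not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}

# Sample input (invented states) instead of a live cluster.
sample='NAME READY STATUS RESTARTS AGE
alertmanager-kube-prometheus-0 2/2 Running 0 37m
kube-prometheus-grafana-6f6c894c5b-2d6h4 0/2 ContainerCreating 0 1m'
pending=$(printf '%s\n' "$sample" | not_running)
printf '%s\n' "$pending"
```

On a real cluster the same filter would be fed with `kubectl get pods -n monitoring | not_running`; an empty result means everything is up.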

Looking closely, we find:

The installation really is trivial. As a small bonus, this post ends with a list of commands for admiring the result in your favorite browser: Firefox.

Start by opening an SSH tunnel on port 9090 to your master:

    ssh -L 9090:127.0.0.1:9090 dada@IPDuMaster

Then start the port-forward:

    kubectl port-forward -n monitoring prometheus-prometheus-operator-prometheus-0 9090

Another SSH tunnel, on port 3000 this time:

    ssh -L 3000:127.0.0.1:3000 dada@IPDuMaster

And another port-forward:

    kubectl port-forward $(kubectl get pods --selector=app=grafana -n monitoring --output=jsonpath="{.items..metadata.name}") -n monitoring 3000

You're all set! The dashboards are now reachable by typing http://localhost:PORT into Firefox.
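Both tunnels can also share a single SSH session, since `ssh` accepts several `-L` options. The sketch below only prints the command so that it can be checked before running it; the helper name `tunnel_cmd` is hypothetical, and `dada@IPDuMaster` is the same placeholder as in the commands above.

```shell
# Hypothetical helper: print one ssh command that forwards every given
# local port to the same port on the remote loopback interface.
tunnel_cmd() {
  target="$1"; shift
  cmd="ssh"
  for port in "$@"; do
    cmd="$cmd -L $port:127.0.0.1:$port"
  done
  printf '%s %s\n' "$cmd" "$target"
}

tunnel_cmd dada@IPDuMaster 9090 3000
# prints: ssh -L 9090:127.0.0.1:9090 -L 3000:127.0.0.1:3000 dada@IPDuMaster
```

The two `kubectl port-forward` commands still have to run on the master, one per port.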

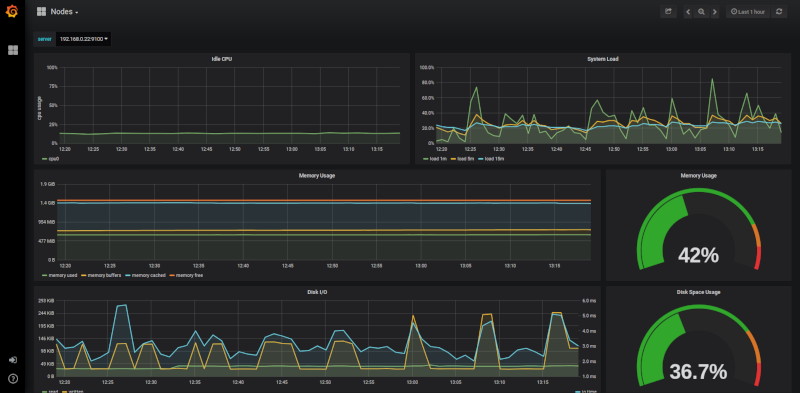

In pictures, this is what Grafana should look like:

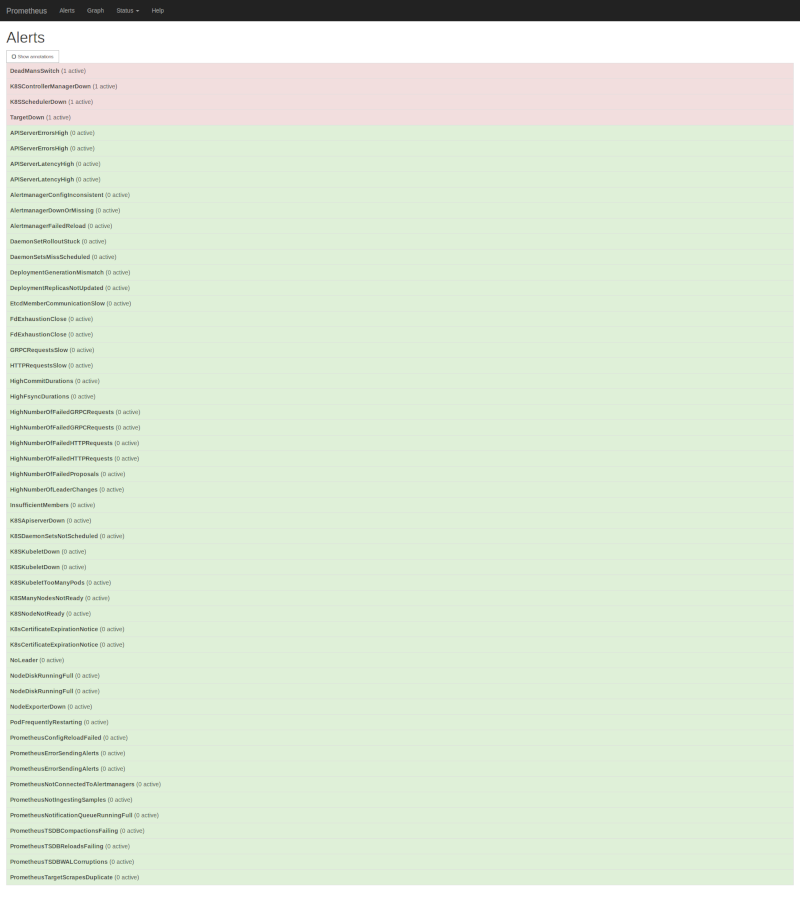

And this is what the Prometheus alerts look like:

So, yes. Did you also notice that some alerts are already firing? They concern tools and configurations that Prometheus expects to find in your cluster. Mine doesn't have this scheduler and controller manager business set up yet. That will be part of the discoveries to come in future posts.

Kisses!

Written by dada / 11 November 2018 / No comments

    FROM php:7.0-apache
    WORKDIR /var/www/html
    RUN apt update
    RUN apt install -y wget unzip
    RUN wget https://git.dadall.info/dada/pluxml/raw/master/pluxml-latest.zip
    RUN mv pluxml-latest.zip /usr/src/

    #VOLUME
    VOLUME /var/www/html

    RUN a2enmod rewrite
    RUN service apache2 restart

    RUN apt-get update && apt-get install -y \
            libfreetype6-dev \
            libjpeg62-turbo-dev \
            libpng-dev \
        && docker-php-ext-install -j$(nproc) iconv \
        && docker-php-ext-configure gd --with-freetype-dir=/usr/include/ --with-jpeg-dir=/usr/include/ \
        && docker-php-ext-install -j$(nproc) gd

    # Expose
    EXPOSE 80

    COPY entrypoint.sh /usr/local/bin/
    ENTRYPOINT ["entrypoint.sh"]
    CMD ["apache2-foreground"]

    #!/bin/bash
    if [ ! -e index.php ]; then
        unzip /usr/src/pluxml-latest.zip -d /var/www/html/
        mv /var/www/html/PluXml/* /var/www/html
        rm -rf /var/www/html/PluXml
        chown -R www-data: /var/www/html
    fi
    exec "$@"

docker build -t pluxml-5.6 .

Successfully built d554d0753425

Successfully tagged pluxml-5.6:latest

    dada@k8smaster:~/pluxml$ cat pluxml.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: pluxml
      labels:
        app: pluxml
    spec:
      ports:
      - port: 80
      selector:
        app: pluxml
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: plx-pv-claim
      labels:
        app: pluxml
    spec:
      storageClassName: rook-ceph-block
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 500Mi
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: pluxml
      labels:
        app: pluxml
    spec:
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: pluxml
        spec:
          containers:
          - image: dadall/pluxml-5.6:latest
            imagePullPolicy: "Always"
            name: pluxml
            ports:
            - containerPort: 80
              name: pluxml
            volumeMounts:
            - name: pluxml-persistent-storage
              mountPath: /var/www/html
          volumes:
          - name: pluxml-persistent-storage
            persistentVolumeClaim:
              claimName: plx-pv-claim

    dada@k8smaster:~/pluxml$ cat pluxml-ingress.yaml
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      annotations:
        kubernetes.io/ingress.class: nginx
      name: pluxml-ingress
    spec:
      backend:
        serviceName: pluxml
        servicePort: 80

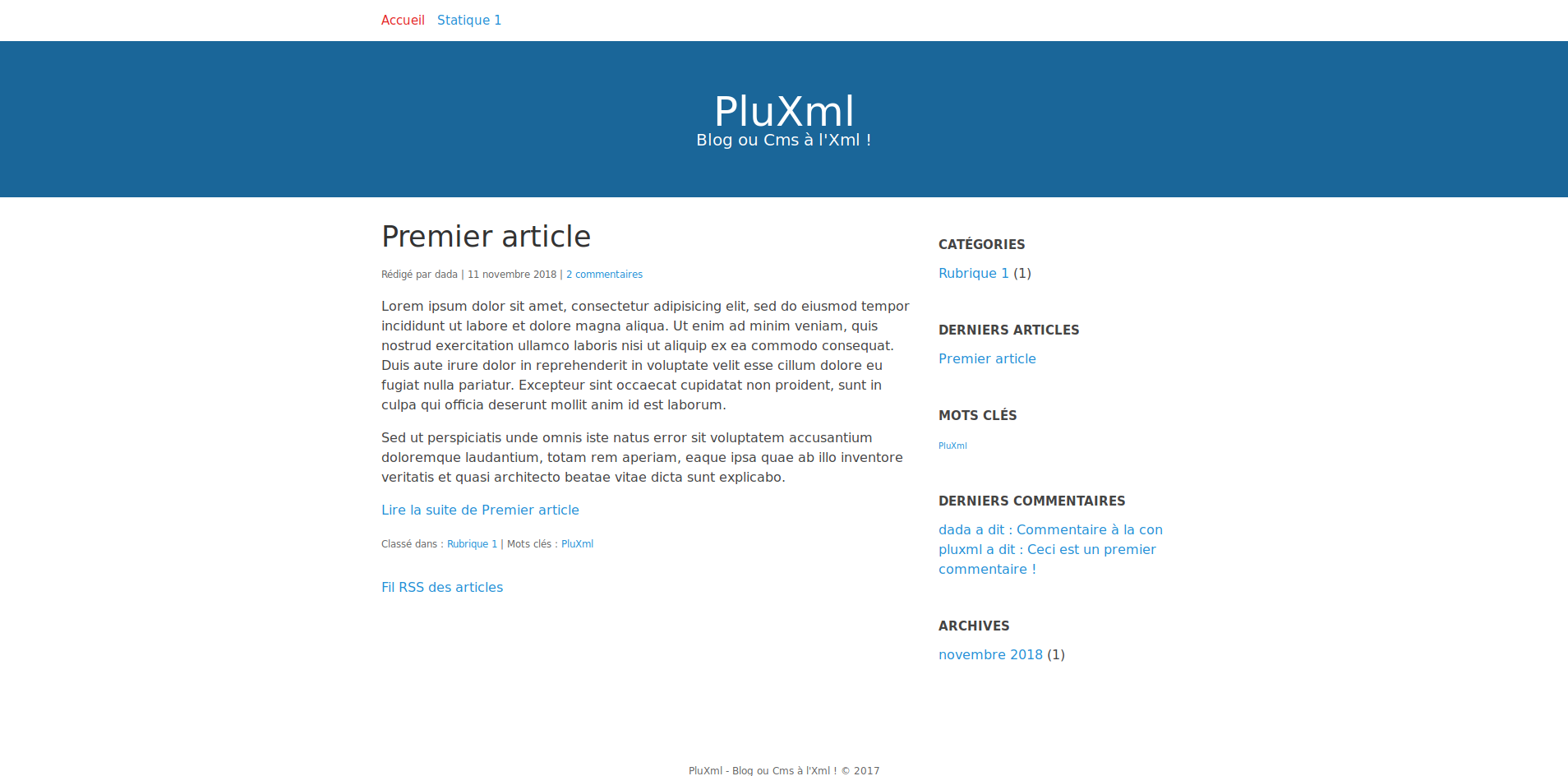

dada@k8smaster:~/pluxml$ kubectl create -f pluxml.yaml

dada@k8smaster:~/pluxml$ kubectl create -f pluxml-ingress.yaml

    dada@k8smaster:~/pluxml$ kubectl get pods --all-namespaces -o wide | grep plux
    default   pluxml-686f7d486-7p5sq   1/1   Running   0   82m   10.244.2.164   k8snode2   <none>

    dada@k8smaster:~/pluxml$ kubectl describe svc pluxml
    Name:              pluxml
    Namespace:         default
    Labels:            app=pluxml
    Annotations:       <none>
    Selector:          app=pluxml
    Type:              ClusterIP
    IP:                10.100.177.201
    Port:              <unset>  80/TCP
    TargetPort:        80/TCP
    Endpoints:         10.244.1.31:80
    Session Affinity:  None
    Events:            <none>

    dada@k8smaster:~/pluxml$ kubectl describe ingress pluxml
    Name:             pluxml-ingress
    Namespace:        default
    Address:
    Default backend:  pluxml:80 (10.244.1.31:80,10.244.2.164:80)
    Rules:
      Host  Path  Backends
      ----  ----  --------
      *     *     pluxml:80 (10.244.1.31:80,10.244.2.164:80)
    Annotations:
      kubernetes.io/ingress.class:  nginx
    Events:  <none>